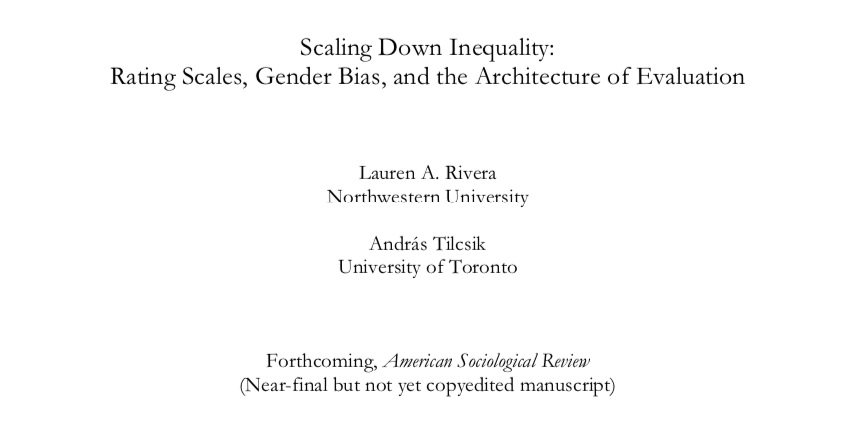

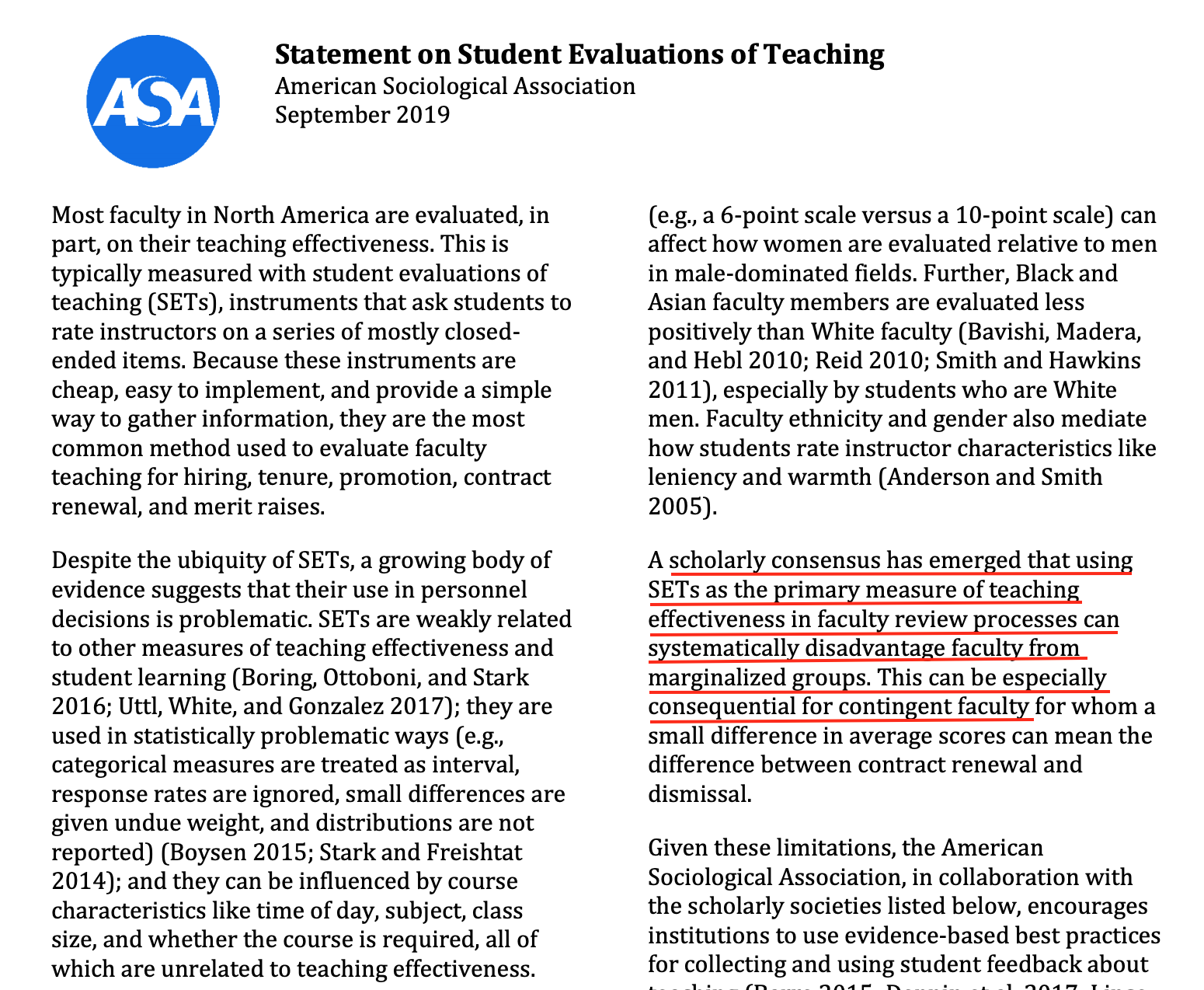

class: blueBack

## Student Evaluations of Teaching

### Canadian Statistical Sciences Institute

#### Philip B. Stark, UC Berkeley

#### 11 May 2022

#### Joint work with Anne Boring, Richard Freishtat, Amanda Glazer, Kellie Ottoboni

---

<br />
<br />

.framed.center.large.blue.vcenter[***The truth will set you free, but first it will piss you off.***]

<br />

.align-right.medium[—Gloria Steinem]

---

<br />
<br />

.framed.center.large.blue.vcenter[***When a measure becomes a target, it ceases to be a good measure.***]

<br/>

.align-right.medium[—Goodhart's Law (via Marilyn Strathern)]

---

### Student Evaluations of Teaching (SET)

+ most common method to evaluate teaching
+ used for hiring, firing, tenure, promotion
+ simple, cheap, fast to administer

--

### .blue[What do they measure?]

---

.center.vcenter.large[Part I: Basic Statistics]

---

### Nonresponse

--

+ SET surveys are an incomplete census, not a random sample.

--

+ Suppose 70% of students respond, with an average of 4 on a 7-point scale.

--

.red[The class average could be anywhere between 3.1 & 4.9]

.small[(if every nonrespondent would have given a 1: 0.7×4 + 0.3×1 = 3.1; if every nonrespondent would have given a 7: 0.7×4 + 0.3×7 = 4.9)]

--

+ In general, if the response rate is _f_ and the scale runs from _a_ to _b_, the range of possible class averages has width _(1-f)(b-a)_.

--

+ A "margin of error" is meaningless: the respondents are not a random sample.

---

### All our arithmetic is below average

+ Does a 3 mean the same thing to every student—even approximately?

--

+ Does a 5 mean the same thing in an upper-division architecture studio, a required freshman Econ course with 500 students, or an online Statistics course with no face-to-face interaction?

--

+ Is the difference between 1 & 2 the same as the difference between 5 & 6?

--

+ Does a 1 balance a 7 to make two 4s?

--

+ What about variability?
    - (1+1+7+7)/4 = (2+3+5+6)/4 = (1+5+5+5)/4 = (4+4+4+4)/4 = 4
    - Polarizing teacher ≠ teacher w/ mediocre ratings
    - 3 statisticians go deer hunting …

---

### What does the mean mean?

.blue[Averages make sense for interval scales, not ordinal scales like SET.]

--

.red[Averaging SET doesn't make sense.]
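--

A minimal Python sketch of the arithmetic on the nonresponse and variability slides, assuming integer ratings on a 1-to-7 scale and no information about nonrespondents beyond the limits of the scale. `nonresponse_bounds` is a hypothetical helper: it fills every missing rating with the lowest, then the highest, possible value, which gives the smallest and largest class averages consistent with the responses. The second part reuses the four rating patterns above, which all average 4.

```python
from statistics import mean, pstdev


def nonresponse_bounds(resp_mean, f, lo=1, hi=7):
    """Smallest and largest possible class average when a fraction f responded.

    Each nonrespondent could have given any rating in [lo, hi], so the extremes
    come from filling every missing rating with lo or with hi.
    """
    lower = f * resp_mean + (1 - f) * lo
    upper = f * resp_mean + (1 - f) * hi
    return lower, upper


# 70% response rate, respondents average 4 on a 1-7 scale.
lower, upper = nonresponse_bounds(4, 0.7)
width = upper - lower  # equals (1 - f) * (hi - lo)
print(f"class average between {lower:.1f} and {upper:.1f} (width {width:.1f})")

# Same average, very different classes: the mean hides the spread.
patterns = {
    "polarized": [1, 1, 7, 7],
    "spread out": [2, 3, 5, 6],
    "one low outlier": [1, 5, 5, 5],
    "all the same": [4, 4, 4, 4],
}
for name, ratings in patterns.items():
    print(f"{name:16s} mean = {mean(ratings)}, SD = {pstdev(ratings):.2f}")
```

The bounds come out to 3.1 and 4.9, and the (population) standard deviations range from 0 for the four 4s to 3 for the polarized class. The sketch treats the ratings as numbers, which is exactly the step these slides question; it only shows that even on those terms, a bare average discards the nonresponse and the spread.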
--

It doesn't make sense to compare average SET across:

+ courses
+ instructors
+ levels
+ types of classes
+ modes of instruction
+ disciplines

--

Nor should we ignore variability or nonresponse.

---

### Same issues in averaging self-selected Likert-scale responses elsewhere:

+ reviews of physicians
+ online product ratings
+ Yelp reviews

---

.center.large.blue.vcenter[***Quantifauxcation***]

### Assign a meaningless number, then conclude that because it's numerical, it's meaningful.

--

(And if the number has 8 digits, they all matter.)

---

.center.vcenter.large[Part II: Science, Scientism]

---

> If you can't prove what you want to prove, demonstrate something else and pretend they are the same thing. In the daze that follows the collision of statistics with the human mind, hardly anyone will notice the difference.

.align-right[Darrell Huff]

--

<br />

.blue[Teaching effectiveness is hard to measure, so instead we measure student opinion (poorly) and pretend the two are the same thing.]

---

## What's effective teaching?

--

+ Should facilitate learning

--

+ Grades usually not a good proxy for learning

--

+ Students generally can't judge how much they learned

--

+ Serious problems with confounding

--

https://xkcd.com/552/

---

### Lauer, 2012.
Survey of 185 students, 45 faculty at Rollins College, FL

Faculty & students don't mean the same thing by "fair," "professional," "organized," "challenging," & "respectful"

--

<table>
<tr><th> <em>not fair</em> means …</th><th>student %</th><th>instructor %</th></tr>
<tr><td>plays favorites</td> <td>45.8</td> <td>31.7</td></tr>
<tr><td>grading problematic</td> <td>2.3 </td> <td>49.2</td></tr>
<tr><td>work is too hard</td> <td>12.7</td> <td>0</td></tr>
<tr><td>won't "work with you" on problems</td> <td>12.3</td> <td>0</td></tr>
<tr><td>other</td> <td>6.9</td> <td>19</td></tr>
</table>

---

<img src="./SetPics/lawLang.png" height="600px" />

---

<img src="./SetPics/hamermeshParker05.png" height="600px" />

---

<img src="./SetPics/babinEtal20.png" height="600px" />

---

<img src="./SetPics/wolbringRiordan16.png" height="600px" />

---

<img src="./SetPics/genderLang.png" height="600px" />

[Ben Schmidt](http://benschmidt.org/profGender/#%7B%22database%22%3A%22RMP%22%2C%22plotType%22%3A%22pointchart%22%2C%22method%22%3A%22return_json%22%2C%22search_limits%22%3A%7B%22word%22%3A%5B%22cold%22%5D%2C%22department__id%22%3A%7B%22%24lte%22%3A25%7D%7D%2C%22aesthetic%22%3A%7B%22x%22%3A%22WordsPerMillion%22%2C%22y%22%3A%22department%22%2C%22color%22%3A%22gender%22%7D%2C%22counttype%22%3A%5B%22WordsPerMillion%22%5D%2C%22groups%22%3A%5B%22department%22%2C%22gender%22%5D%2C%22testGroup%22%3A%22A%22%7D)

Chili peppers clearly matter for teaching effectiveness.
---

#### "She does have an accent, but …"

[Subtirelu 2015](https://doi.org/10.1017/S0047404514000736)

<img src="./SetPics/subtirelu15.png" height="600px" />

---

<img src="./SetPics/ambadyRosenthal93.png" height="600px" />

---

<img src="./SetPics/arbuckleWilliams03.png" height="600px" />

---

<img src="./SetPics/chisadzaEtal19.png" height="600px" />

---

<img src="./SetPics/uttlEtal16.png" height="600px" />

---

<img src="./SetPics/carrellWest08.png" height="600px" />

---

<img src="./SetPics/bragaEtal11.png" height="600px" />

---

<img src="./SetPics/keng17.png" height="600px" alt="Keng 2017" />

---

<img src="./SetPics/stroebe16.png" height="600px" />

---

Wagner et al., 2016

<img src="./SetPics/wagnerEtal16.png" height="600px" />

---

**64% of students** gave answers contradicting what they had demonstrated they knew was true.

<img src="./SetPics/stanfel95.png" height="550px" />

---

<img src="./SetPics/stanfel-2-95.png" height="600px" />

---

<img src="./SetPics/hesslerEtal18-4.png" height="600px" />

---

The number of points on the rating scale affects gender differences

---

<img src="./SetPics/ocufa19-1.png" height="600px" />

---

<img src="./SetPics/ocufa19-2.png" height="600px" />

---

<img src="./SetPics/macnellEtal15.png" height="600px" />

---

.left-column[
[MacNell, Driscoll, & Hunt, 2014](http://link.springer.com/article/10.1007/s10755-014-9313-4)

NC State online course.

Students randomized into 6 groups: 2 taught by the primary professor, 4 by GSIs.

2 GSIs: 1 male, 1 female.

Each GSI used their actual name in 1 section and the other GSI's name in 1 section.

5-point scale.
]
.right-column[
.small[
<table>
<tr><th> Characteristic</th> <th>M - F</th> <th>perm \(P\)</th> <th>t-test \(P\)</th></tr>
<tr><td>Overall </td><td> 0.47 </td><td> 0.12 </td><td> 0.128 </td></tr>
<tr><td>Professional </td><td> 0.61 </td><td style="background-color:Khaki"> 0.07 </td><td> 0.124 </td></tr>
<tr><td>Respectful </td><td> 0.61 </td><td style="background-color:Khaki"> 0.06 </td><td> 0.124 </td></tr>
<tr><td>Caring </td><td> 0.52 </td><td style="background-color:Khaki"> 0.10 </td><td style="background-color:Khaki"> 0.071 </td></tr>
<tr><td>Enthusiastic </td><td> 0.57 </td><td style="background-color:Khaki"> 0.06 </td><td> 0.112 </td></tr>
<tr><td>Communicate </td><td> 0.57 </td><td style="background-color:Khaki"> 0.07 </td><td> NA </td></tr>
<tr><td>Helpful </td><td> 0.46 </td><td> 0.17 </td><td style="background-color:yellow"> 0.049 </td></tr>
<tr><td>Feedback </td><td> 0.47 </td><td> 0.16 </td><td style="background-color:Khaki"> 0.054 </td></tr>
<tr><td>Prompt </td><td> 0.80 </td><td style="background-color:yellow"> 0.01 </td><td> 0.191 </td></tr>
<tr><td>Consistent </td><td> 0.46 </td><td> 0.21 </td><td style="background-color:yellow"> 0.045 </td></tr>
<tr><td>Fair </td><td> 0.76 </td><td style="background-color:yellow"> 0.01 </td><td> 0.188 </td></tr>
<tr><td>Responsive </td><td> 0.22 </td><td> 0.48 </td><td style="background-color:yellow"> 0.013 </td></tr>
<tr><td>Praise </td><td> 0.67 </td><td style="background-color:yellow"> 0.01 </td><td> 0.153 </td></tr>
<tr><td>Knowledge </td><td> 0.35 </td><td> 0.29 </td><td style="background-color:yellow"> 0.038 </td></tr>
<tr><td>Clear </td><td> 0.41 </td><td> 0.29 </td><td> NA </td></tr>
</table>
]
]

---

R.A. Fisher, 1935

<img src="./ReproPics/fisher_t_permute_1.png" height="600px" />

---

<img src="./ReproPics/fisher_t_permute_2.png" height="600px" />

---

+ Two centers, A and B.
+ 4 units per center, randomized 2 to treatment and 2 to control
+ Response is \\(a\\) for control in A, \\(a+1\\) for treatment in A; \\(b\\) for control in B, \\(b+1\\) for treatment in B.

--

+ Permutation P-value is \\(1/{{4}\choose{2}}^2 = 1/36 \approx 0.028\\): of the 36 equally likely within-center assignments, only the actual one gives a difference as large as observed.

--

+ Student's t statistic for \\(b-a=10\\) is \\(1/\sqrt{(100/3)(1/4+1/4)} = 1/\sqrt{100/6} \approx 0.2449\\) (6 degrees of freedom); one-sided P-value 0.41

---

### Exam performance and instructor gender

Mean grade and instructor gender (male minus female)

<table>
<tr><th> </th><th> difference in means </th><th> \(P\)-value </th></tr>
<tr><td> Perceived </td><td> 1.76 </td><td> 0.54 </td></tr>
<tr><td> Actual </td><td> -6.81 </td><td style="background-color:yellow"> 0.02 </td></tr>
</table>

---

<img src="./SetPics/uttlViolo21.png" height="600px" />

---

<img src="./SetPics/khazanEtal20.png" height="600px" />

---

<img src="./SetPics/khazanEtal20-2.png" height="600px" />

---

+ Apparent effect sizes smaller than in MacNell et al.
+ Treatment assignment not randomized: assigned alphabetically.
+ Used "Jesse" as the male name; generally considered gender-neutral

---

+ Omnibus nonparametric combination (NPC) permutation test for MacNell et al. data gives \\(P < 10^{-5}\\).

--

+ Omnibus NPC permutation test for Khazan et al. gives \\(P = 0.6845\\), pretending "alphabetical" = "random".

--

+ Combining the two studies with Fisher's combining function gives \\(P < 8.825 \times 10^{-5}\\): adding Khazan et al. only slightly decreases the evidence against the null.

---

### "Natural experiment": Boring et al., 2016.
+ 5 years of data for 6 mandatory freshman classes at SciencesPo:<br />History, Political Institutions, Microeconomics, Macroeconomics, Political Science, Sociology

--

+ 23,001 SET, 379 instructors, 4,423 students, 1,194 sections (950 without Political Institutions, PI), 21 year-by-course strata

--

+ response rate ~100%
+ anonymous finals except PI
+ interim grades before final

--

#### Test statistics (for stratified permutation test)

+ Correlation between SET and gender within each stratum, averaged across strata
+ Correlation between SET and average final exam score within each stratum, averaged across strata
+ Could have used, e.g., correlation within strata, combined with Fisher's function

---

### SciencesPo

Average correlation between SET and final exam score

<table>
<tr><th> </th><th> strata </th><th> \(\bar{\rho}\) </th><th> \(P\) </th></tr>
<tr><td>Overall </td><td> 26 (21) </td><td> 0.04 </td><td> 0.09 </td></tr>
<tr><td>History </td><td> 5 </td><td> 0.16 </td><td style="background-color:yellow"> 0.01 </td></tr>
<tr><td>Political Institutions </td><td> 5 </td><td> N/A </td><td> N/A </td></tr>
<tr><td>Macroeconomics </td><td> 5 </td><td> 0.06 </td><td> 0.19 </td></tr>
<tr><td>Microeconomics </td><td> 5 </td><td> -0.01 </td><td> 0.55 </td></tr>
<tr><td>Political Science </td><td> 3 </td><td> -0.03 </td><td> 0.62 </td></tr>
<tr><td>Sociology </td><td> 3 </td><td> -0.02 </td><td> 0.61 </td></tr>
</table>

---

Average correlation between SET and instructor gender

<table>
<tr><th> </th><th> \(\bar{\rho}\) </th><th> \(P\) </th></tr>
<tr><td>Overall </td><td> 0.09 </td><td style="background-color:yellow"> 0.00 </td></tr>
<tr><td>History </td><td> 0.11 </td><td> 0.08 </td></tr>
<tr><td>Political Institutions </td><td> 0.11 </td><td> 0.10 </td></tr>
<tr><td>Macroeconomics </td><td> 0.10 </td><td> 0.16 </td></tr>
<tr><td>Microeconomics </td><td> 0.09 </td><td> 0.16 </td></tr>
<tr><td>Political Science </td><td> 0.04 </td><td> 0.63 </td></tr>
<tr><td>Sociology </td><td> 0.08 </td><td> 0.34
</td></tr>
</table>

---

Average correlation between final exam scores and instructor gender

<table>
<tr><th> </th><th> \(\bar{\rho}\) </th><th> \(P\) </th></tr>
<tr><td>Overall </td><td> -0.06 </td><td> 0.07 </td></tr>
<tr><td>History </td><td> -0.08 </td><td> 0.22 </td></tr>
<tr><td>Macroeconomics </td><td> -0.06 </td><td> 0.37 </td></tr>
<tr><td>Microeconomics </td><td> -0.06 </td><td> 0.37 </td></tr>
<tr><td>Political Science </td><td> -0.03 </td><td> 0.70 </td></tr>
<tr><td>Sociology </td><td> -0.05 </td><td> 0.55 </td></tr>
</table>

---

Average correlation between SET and interim grades

<table>
<tr><th> </th><th> \(\bar{\rho}\) </th><th> \(P\) </th></tr>
<tr><td>Overall </td><td> 0.16 </td><td style="background-color:yellow"> 0.00 </td></tr>
<tr><td>History </td><td> 0.32 </td><td style="background-color:yellow"> 0.00 </td></tr>
<tr><td>Political Institutions </td><td> -0.02 </td><td> 0.61 </td></tr>
<tr><td>Macroeconomics </td><td> 0.15 </td><td style="background-color:yellow"> 0.01 </td></tr>
<tr><td>Microeconomics </td><td> 0.13 </td><td style="background-color:yellow"> 0.03 </td></tr>
<tr><td>Political Science </td><td> 0.17 </td><td style="background-color:yellow"> 0.02 </td></tr>
<tr><td>Sociology </td><td> 0.24 </td><td style="background-color:yellow"> 0.00 </td></tr>
</table>

---

### Who supports SET?

.framed[
.blue[
>> It is difficult to get a man to understand something, when his salary depends upon his not understanding it!
—Upton Sinclair
]
]

---

<img src="./SetPics/uttlEtal19.png" height="600px" />

---

+ Are SET more sensitive to effectiveness or to something else?
+ Do comparably effective women and men get comparable SET?
+ But for their gender, would women get higher SET than they do? (And but for their gender, would men get lower SET than they do?)

--

.blue[Need to compare like teaching with like teaching, not an arbitrary collection of women with an arbitrary collection of men.]
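--

Comparing like with like is what a stratified permutation test does: treatment labels are reshuffled only within strata (the two centers in Fisher's example, the year-by-course strata at SciencesPo), so every comparison is between units in the same stratum. The Python below is a minimal sketch, not the code behind these slides. It assumes strata small enough to enumerate every within-stratum assignment exactly, and it uses the difference in sums as the test statistic rather than the average within-stratum correlation used for SciencesPo. The function names are hypothetical. The usage lines rebuild the two-center example with a = 0 and b = 10 and compare the exact permutation P-value with the pooled two-sample t test, whose one-sided tail probability is computed by integrating the Student t density with Simpson's rule.

```python
import math
from fractions import Fraction
from itertools import combinations, product


def stratified_permutation_p(strata):
    """Exact one-sided P-value, permuting treatment labels only within strata.

    strata: list of (responses, treated) pairs; `treated` is a tuple of the
    indices of the treated units in that stratum.
    Statistic: sum over strata of (treated total - control total). With the
    number treated fixed in each stratum, this ranks assignments the same way
    as the overall difference in means.
    """
    def statistic(assignment):
        total = 0
        for (y, _), treated in zip(strata, assignment):
            total += sum(y[i] for i in treated)
            total -= sum(y[i] for i in range(len(y)) if i not in treated)
        return total

    observed = statistic([treated for _, treated in strata])
    # Every way to pick the same number of treated units in each stratum.
    choices = [list(combinations(range(len(y)), len(t))) for y, t in strata]
    hits = count = 0
    for assignment in product(*choices):
        count += 1
        if statistic(assignment) >= observed:
            hits += 1
    return Fraction(hits, count)


def pooled_t(x, y):
    """Two-sample Student t statistic (pooled variance) and its degrees of freedom."""
    nx, ny = len(x), len(y)
    mx, my = sum(x) / nx, sum(y) / ny
    ss = sum((v - mx) ** 2 for v in x) + sum((v - my) ** 2 for v in y)
    df = nx + ny - 2
    return (mx - my) / math.sqrt(ss / df * (1 / nx + 1 / ny)), df


def t_upper_tail(t, df, steps=2000):
    """P(T >= t) for t >= 0: integrate the t density over [0, t] with Simpson's rule."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def density(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = t / steps  # `steps` must be even for Simpson's rule
    s = density(0) + density(t)
    s += sum((4 if k % 2 else 2) * density(k * h) for k in range(1, steps))
    return 0.5 - s * h / 3


# Two centers, 4 units each, 2 treated per center; treatment adds 1 to the response.
a, b = 0.0, 10.0
strata = [([a, a, a + 1, a + 1], (2, 3)),
          ([b, b, b + 1, b + 1], (2, 3))]
p_perm = stratified_permutation_p(strata)
t_stat, df = pooled_t([a + 1, a + 1, b + 1, b + 1], [a, a, b, b])
p_t = t_upper_tail(t_stat, df)
print(p_perm, float(p_perm), t_stat, df, p_t)
```

With these inputs the permutation P-value is 1/36 ≈ 0.028, while the t statistic is about 0.245 on 6 degrees of freedom, with a one-sided tail probability of about 0.41: the pooled variance is dominated by the 10-point gap between centers, a gap the stratified test never compares across.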
--

Boring (2014) finds that the _costs_ of increasing SET are very different for men and women.

---

## These are not the only biases!

+ Ethnicity and race
+ Age
+ Attractiveness
+ Accents / non-native English speakers
+ "Halo effect"
+ …

---

## What do SET measure?

- .blue[strongly correlated with students' grade expectations]<br />
  Boring et al., 2016; Johnson, 2003; Marsh & Cooper, 1980; Short et al., 2008; Worthington, 2002

--

- .blue[strongly correlated with enjoyment]<br />
  Stark, unpublished, 2012: 1,486 students<br />
  Correlation between ratings of instructor effectiveness & enjoyment: 0.75.<br />
  Correlation between ratings of course effectiveness & enjoyment: 0.8.

--

- .blue[correlated with instructor gender, ethnicity, attractiveness, & age]<br />
  Anderson & Miller, 1997; Ambady & Rosenthal, 1993; Arbuckle & Williams, 2003; Basow, 1995; Boring, 2017; Boring et al., 2016; Chisadza et al., 2019; Cramer & Alexitch, 2000; Marsh & Dunkin, 1992; MacNell et al., 2014; Wachtel, 1998; Wallisch & Cachia, 2018; Weinberg et al., 2007; Worthington, 2002

--

- .blue[omnibus, abstract questions about curriculum design, effectiveness, etc., are most influenced by factors unrelated to learning]<br />
  Worthington, 2002

--

- .red[SET are not very sensitive to effectiveness: weak and/or negative association]

--

- .red[Calling something "teaching effectiveness" does not make it so]

--

- .red[Computing averages to 2 decimals doesn't make them reliable]

---

.center.vcenter.large[Part V: What to do, then?]

---

### What might we be able to discover about teaching?

.looser[
+ Is she dedicated to and engaged in her teaching?
+ Is she available to students?
+ Is she putting in appropriate effort? Is she creating new materials, new courses, or new pedagogical approaches?
+ Is she revising, refreshing, and reworking existing courses using feedback and ongoing experimentation?
+ Is she helping keep the department's curriculum up to date?
+ Is she trying to improve?
+ Is she contributing to the college's teaching mission in a serious way?
+ Is she supervising undergraduates for research, internships, and honors theses?
+ Is she advising and mentoring students?
+ Do her students do well when they graduate?
]

---

## Principles for SET items

+ ask about students' subjective experience of the course (e.g., did the student find the course challenging?)
+ ask for personal observations (e.g., was the instructor’s handwriting legible?)
+ avoid abstract questions, omnibus questions, and questions requiring judgment, because they are particularly subject to biases related to instructor gender, age, and other characteristics protected by employment law
+ avoid questions that require evaluative judgments (e.g., how effective was the instructor?)
+ focus on things that affect learning and the learning experience
+ focus on things the instructor has control over
+ focus on things that can inform better pedagogy
+ multiple-choice items generally should provide free-form space to explain the choice
+ **interpret responses cautiously**

---

### New bad items

+ The instructor created an inclusive environment consistent with the university's diversity goals.
+ The structure of the course helped me learn.

---

#### Litigation

+ Union arbitration in Canada: Newfoundland (Memorial U.), Ontario (OCUFA, Ryerson U.), Toronto
+ Civil litigation in US: Ohio (Miami U.), Vermont, Nevada
+ Union arbitration and grievances in US: Florida (UFF, U. Florida), California (UCB)
+ Discussions with several attorneys who want to pursue class actions

---

### Progress

+ USC, University of Oregon, Colorado State University, Ontario
+ UC Berkeley: Division of Mathematical and Physical Sciences

---

#### References

+ Ambady, N., and R. Rosenthal, 1993. Half a Minute: Predicting Teacher Evaluations from Thin Slices of Nonverbal Behavior and Physical Attractiveness, _J. Personality and Social Psychology_, _64_, 431-441.
+ Arbuckle, J., and B.D. Williams, 2003.
Students' Perceptions of Expressiveness: Age and Gender Effects on Teacher Evaluations, _Sex Roles_, _49_, 507-516. DOI 10.1023/A:1025832707002
+ Archibeque, O., 2014. Bias in Student Evaluations of Minority Faculty: A Selected Bibliography of Recent Publications, 2005 to Present. http://library.auraria.edu/content/bias-student-evaluations-minority-faculty (last retrieved 30 September 2016)
+ Basow, S., S. Codos, and J. Martin, 2013. The Effects of Professors' Race and Gender on Student Evaluations and Performance, _College Student Journal_, _47_ (2), 352-363.
+ Blair-Loy, M., E. Rogers, D. Glaser, Y.L.A. Wong, D. Abraham, and P.C. Cosman, 2017. Gender in Engineering Departments: Are There Gender Differences in Interruptions of Academic Job Talks?, _Social Sciences_, _6_, DOI 10.3390/socsci6010029
+ Boring, A., 2015. Gender Bias in Student Evaluations of Teachers, OFCE-PRESAGE-Sciences-Po Working Paper, http://www.ofce.sciences-po.fr/pdf/dtravail/WP2015-13.pdf (last retrieved 30 September 2016)
+ Boring, A., K. Ottoboni, and P.B. Stark, 2016. Student Evaluations of Teaching (Mostly) Do Not Measure Teaching Effectiveness, _ScienceOpen_, DOI 10.14293/S2199-1006.1.SOR-EDU.AETBZC.v1
+ Braga, M., M. Paccagnella, and M. Pellizzari, 2014. Evaluating Students' Evaluations of Professors, _Economics of Education Review_, _41_, 71-88.
+ Carrell, S.E., and J.E. West, 2010. Does Professor Quality Matter? Evidence from Random Assignment of Students to Professors, _J. Political Economy_, _118_, 409-432.
+ Chisadza, C., N. Nicholls, and E. Yitbarek, 2019. Race and Gender Biases in Student Evaluations of Teachers, _Economics Letters_, _179_, 66-71, DOI 10.1016/j.econlet.2019.03.022.

---

+ Fan, Y., L.J. Shepherd, E. Slavich, D. Waters, M. Stone, R. Abel, and E.L. Johnston, 2019. Gender and cultural bias in student evaluations: Why representation matters, _PLoS ONE_, _14_, e0209749. https://doi.org/10.1371/journal.pone.0209749
+ Feeley, T.H., 2002.
Evidence of Halo Effects in Student Evaluations of Communication Instruction, _Communication Education_, _51_ (3), 225-236, DOI 10.1080/03634520216519
+ Hamermesh, D.S., and A. Parker, 2005. Beauty in the classroom: Instructors' pulchritude and putative pedagogical productivity, _Economics of Education Review_, _24_ (4), 369-376. https://www.sciencedirect.com/science/article/abs/pii/S0272775704001165
+ Hessler, M., D.M. Pöpping, H. Hollstein, H. Ohlenburg, P.H. Arnemann, C. Massoth, L.M. Seidel, A. Zarbock, and M. Wenk, 2018. Availability of cookies during an academic course session affects evaluation of teaching, _Medical Education_, _52_, 1064–1072. DOI 10.1111/medu.13627
+ Hill, M.C., and K.K. Epps, 2010. The Impact of Physical Classroom Environment on Student Satisfaction and Student Evaluation of Teaching in the University Environment, _Academy of Educational Leadership Journal_, _14_, 65.
+ Hornstein, H.A., 2017. Student evaluations of teaching are an inadequate assessment tool for evaluating faculty performance, _Cogent Education_, _4_, 1304016. http://dx.doi.org/10.1080/2331186X.2017.1304016
+ Johnson, V.E., 2003. _Grade Inflation: A Crisis in College Education_, Springer-Verlag, NY, 262pp.
+ Kaatz, A., B. Gutierrez, and M. Carnes, 2014. Threats to objectivity in peer review: the case of gender, _Trends in Pharmacological Sciences_, _35_, 371–373. DOI 10.1016/j.tips.2014.06.005
+ Keng, S.-H., 2017. Tenure system and its impact on grading leniency, teaching effectiveness and student effort, _Empirical Economics_, DOI 10.1007/s00181-017-1313-7
+ Khazan, E., J. Borden, S. Johnson, and L. Greenhaw, 2020. Examining Gender Bias in Student Evaluation of Teaching for Graduate Teaching Assistants, NACTA.
+ Lake, D.A., 2001. Student Performance and Perceptions of a Lecture-based Course Compared with the Same Course Utilizing Group Discussion, _Physical Therapy_, _81_, 896-902.

---

+ Lauer, C., 2012.
A Comparison of Faculty and Student Perspectives on Course Evaluation Terminology, in _To Improve the Academy: Resources for Faculty, Instructional, and Educational Development_, _31_, J.E. Groccia and L. Cruz, eds., Jossey-Bass, 195-211.
+ Lee, L.J., M.E. Connolly, M.H. Dancy, C.R. Henderson, and W.M. Christensen, 2018. A comparison of student evaluations of instruction vs. students' conceptual learning gains, _American Journal of Physics_, _86_, 531. DOI 10.1119/1.5039330
+ MacNell, L., A. Driscoll, and A.N. Hunt, 2015. What's in a Name: Exposing Gender Bias in Student Ratings of Teaching, _Innovative Higher Education_, _40_, 291-303. DOI 10.1007/s10755-014-9313-4
+ Madera, J.M., M.R. Hebl, and R.C. Martin, 2009. Gender and Letters of Recommendation for Academia: Agentic and Communal Differences, _Journal of Applied Psychology_, _94_, 1591–1599. DOI 10.1037/a0016539
+ Marsh, H.W., and T. Cooper, 1980. Prior Subject Interest, Students' Evaluations, and Instructional Effectiveness. Paper presented at the annual meeting of the American Educational Research Association.
+ Marsh, H.W., and L.A. Roche, 1997. Making Students' Evaluations of Teaching Effectiveness Effective, _American Psychologist_, _52_, 1187-1197.
+ Mengel, F., J. Sauermann, and U. Zölitz, 2018. Gender Bias in Teaching Evaluations, _Journal of the European Economic Association_, jvx057, DOI 10.1093/jeea/jvx057
+ Moss-Racusin, C.A., J.F. Dovidio, V.L. Brescoll, M.J. Graham, and J. Handelsman, 2012. Science faculty's subtle gender biases favor male students, _PNAS_, _109_, 16474–16479. www.pnas.org/cgi/doi/10.1073/pnas.1211286109
+ Nilson, L.B., 2012. Time to Raise Questions about Student Ratings, in _To Improve the Academy: Resources for Faculty, Instructional, and Educational Development_, _31_, J.E. Groccia and L. Cruz, eds., Jossey-Bass, 213-227.
+ Ontario Confederation of University Faculty Associations, 2019.
Report of the OCUFA on Student Questionnaires on Courses and Teaching Working Group, https://ocufa.on.ca/assets/OCUFA-SQCT-Report.pdf

---

+ Reuben, E., P. Sapienza, and L. Zingales, 2014. How stereotypes impair women’s careers in science, _PNAS_, _111_, 4403–4408. www.pnas.org/cgi/doi/10.1073/pnas.1314788111
+ Rivera, L., and A. Tilcsik, 2019. Scaling Down Inequality: Rating Scales, Gender Bias, and the Architecture of Evaluation, _American Sociological Review_, in press.
+ Sarsons, H., 2015. Gender Differences in Recognition for Group Work. http://scholar.harvard.edu/files/sarsons/files/gender_groupwork.pdf?m=1449178759
+ Schmader, T., J. Whitehead, and V.H. Wysocki, 2007. A Linguistic Comparison of Letters of Recommendation for Male and Female Chemistry and Biochemistry Job Applicants, _Sex Roles_, _57_, 509–514. DOI 10.1007/s11199-007-9291-4
+ Schmidt, B., 2015. Gendered Language in Teacher Reviews, http://benschmidt.org/profGender (last retrieved 30 September 2016)
+ Short, H., R. Boyle, R. Braithwaite, M. Brookes, J. Mustard, and D. Saundage, 2008. A comparison of student evaluation of teaching with student performance, in _OZCOTS 2008: Proceedings of the 6th Australian Conference on Teaching Statistics_, 1-10.
+ Stanfel, L.E., 1995. Measuring the Accuracy of Student Evaluations of Teaching, _Journal of Instructional Psychology_, _22_ (2), 117-125.
+ Stark, P.B., and R. Freishtat, 2014. An Evaluation of Course Evaluations, _ScienceOpen_, DOI 10.14293/S2199-1006.1.SOR-EDU.AOFRQA.v1
+ Stroebe, W., 2016. Why Good Teaching Evaluations May Reward Bad Teaching: On Grade Inflation and Other Unintended Consequences of Student Evaluations, _Perspectives on Psychological Science_, _11_ (6), 800–816, DOI 10.1177/1745691616650284
+ Subtirelu, N.C., 2015. "She does have an accent but…": Race and language ideology in students' evaluations of mathematics instructors on RateMyProfessors.com, _Language in Society_, _44_, 35-62.
DOI 10.1017/S0047404514000736

---

+ Uttl, B., C.A. White, and A. Morin, 2013. The Numbers Tell it All: Students Don't Like Numbers!, _PLoS ONE_, _8_ (12), e83443, DOI 10.1371/journal.pone.0083443
+ Uttl, B., C.A. White, and D.W. Gonzalez, 2016. Meta-analysis of Faculty's Teaching Effectiveness: Student Evaluation of Teaching Ratings and Student Learning Are Not Related, _Studies in Educational Evaluation_, DOI 10.1016/j.stueduc.2016.08.007
+ Uttl, B., K. Cnudde, and C.A. White, 2019. Conflict of interest explains the size of student evaluation of teaching and learning correlations in multisection studies: a meta-analysis, _PeerJ_, _7_, e7225. https://doi.org/10.7717/peerj.7225
+ Wagner, N., M. Rieger, and K. Voorvelt, 2016. International Institute of Social Studies Working Paper 617.
+ Wallisch, P., and J. Cachia, 2018. Are student evaluations really biased by gender? Nope, they're biased by "hotness." _Slate_, slate.com/technology/2018/04/hotness-affects-student-evaluations-more-than-gender.html
+ Witteman, H., M. Hendricks, S. Straus, and C. Tannenbaum, 2018. Female grant applicants are equally successful when peer reviewers assess the science, but not when they assess the scientist. https://www.biorxiv.org/content/early/2018/01/19/232868
+ Wolbring, T., and P. Riordan, 2016. How beauty works. Theoretical mechanisms and two empirical applications on students' evaluation of teaching, _Social Science Research_, _57_, 253–272.