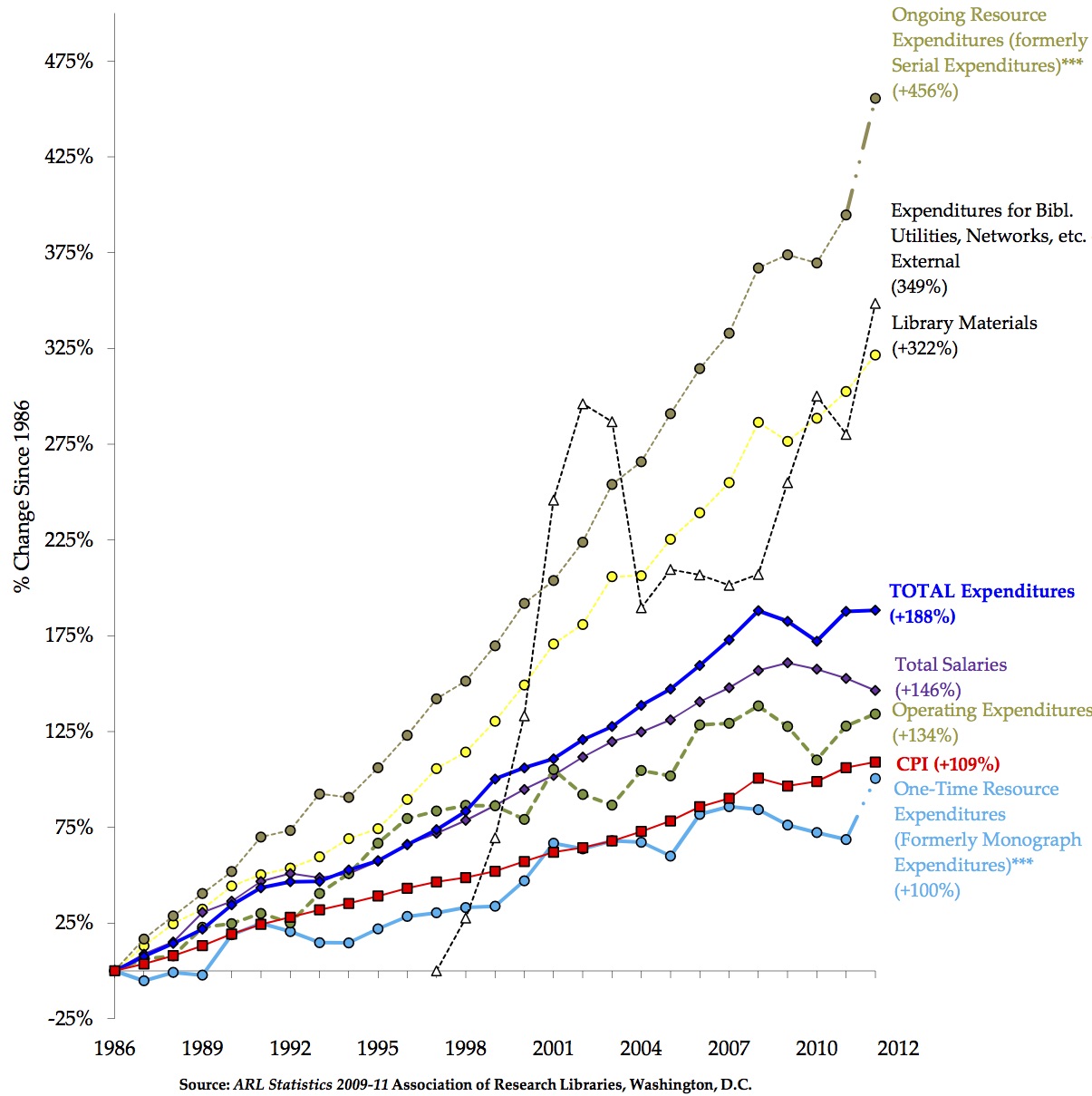

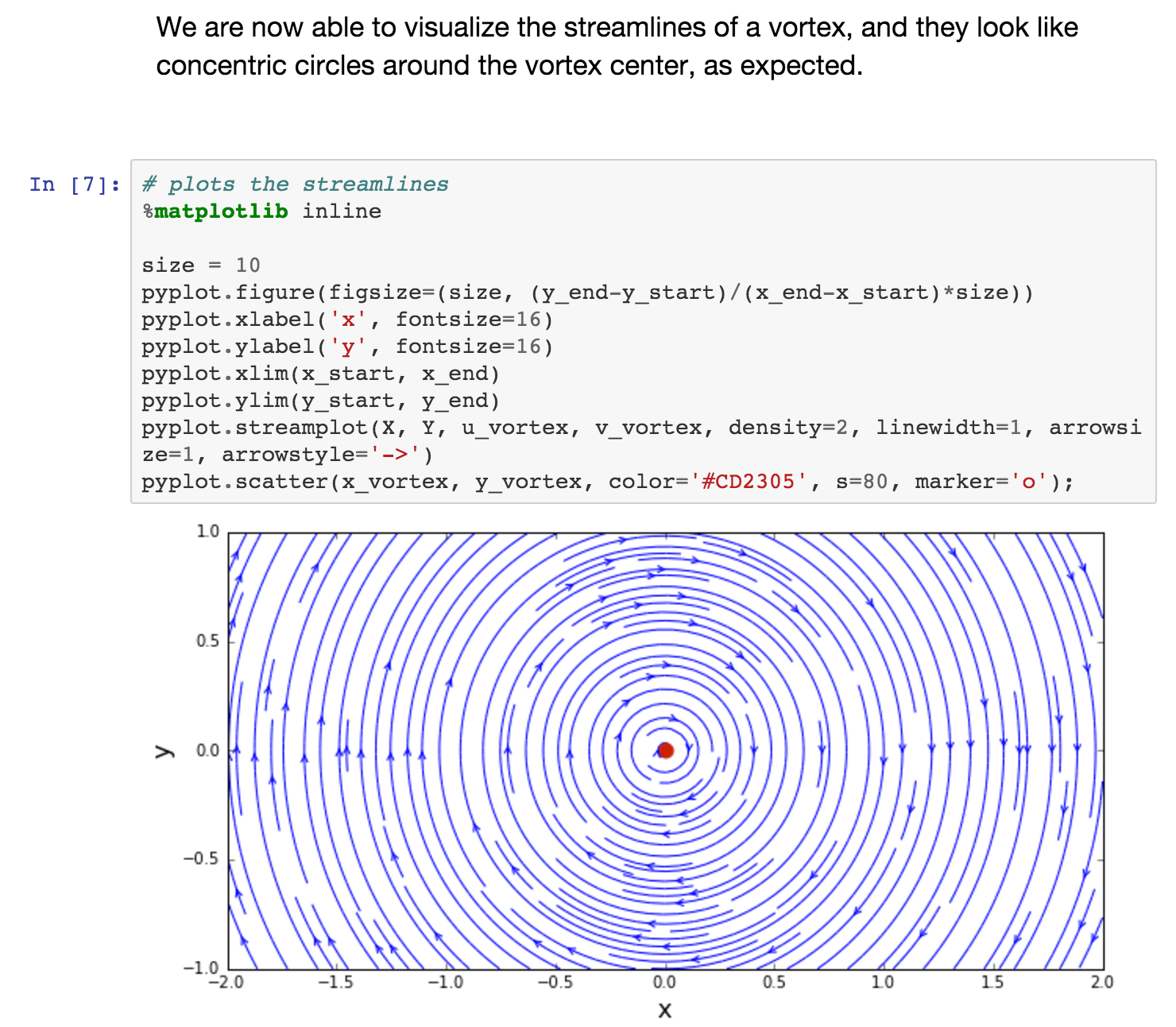

class:textShadow

## A Noob's Guide to Reproducibility <br />(& Open Science)

## Philip B. Stark

### http://www.stat.berkeley.edu/~stark

### Nuclear Engineering / BIDS / BITSS Seminar<br /> UC Berkeley<br />25 January 2016

---

## Disclaimers and Credits

--

.blue[I'm the noob.]

--

Much owed to:

+ Fernando Perez, Jarrod Millman, Aaron Culich, et al. for computational reproducibility
+ Yoav Benjamini for statistical reproducibility
+ Lorena Barba for reproducibility in teaching

---

+ What does it mean to work reproducibly and transparently?
+ Why bother?
+ Whom does it benefit, and how?
+ What will it cost me?
+ What work habits will I need to change?
+ Will I need to learn new tools?
+ What resources help?
+ What's the simplest thing I can do to make my work more reproducible?
+ How can I move my discipline, my institution, and science as a whole towards reproducibility?

---

## Reproducibility in a nutshell:

--

### .blue.center[Show your work.]

--

Provide evidence that you are right, not just a claim.

---

## Science is _show me_, not _trust me_

--

For current science, journals no longer provide complete-enough descriptions, just advertisements.

--

.blue[Goal: enable others to check whether your tables and figures result from doing what you said to the data you said you did it to.]

--

+ Verbal description
+ Math
+ Data
+ Code
+ Figures & tables

---

## Why?

My top reasons:

1. Others can check my work and correct it if it's wrong.
2. Others can re-use and extend my work more easily.

--

.blue[_Others_ includes me, next week.]

---

## Why not?

.left-column[
### .red[Obstacles & Excuses]
]

.right-column[
+ time & effort
+ no direct academic credit
+ viewed as unimportant
+ requires changing habits, tools, etc.
+ fear of scoops
+ fear of exposure of flaws
+ IP/privacy issues, data moratoria, etc.
+ lack of tools, training, infrastructure
+ lack of support from journals, length limits, etc.
+ lack of standards? lack of shared lexicon?
]

---

### Stodden (2010) Survey of NIPS:

<hr />

.left[
**Code**

77%

52%

44%

40%

34%

N/A

30%

30%

20%
]

.middle[
.center[
**Complaint/Excuse**

Time to document and clean up

Dealing with questions from users

Not receiving attribution

Possibility of patents

Legal barriers (i.e., copyright)

Time to verify release with admin

Potential loss of future publications

Competitors may get an advantage

Web/disk space limitations
]
]

.right[
**Data**

54%

34%

42%

N/A

41%

38%

35%

33%

29%
]

--

.full-width[
### .red[I.e., fear, greed, ignorance, & sloth.]
]

---

### Hacking the limbic system:

.red[If I say _just trust me_ and I'm wrong, I'm untrustworthy.]

--

.blue[If I say _here's my work_ and it's wrong, I'm honest, human, and serving scientific progress.]

---

### "Rampant software errors undermine scientific results"

David A.W. Soergel, 2014

http://f1000research.com/articles/3-303/v1

Abstract: Errors in scientific results due to software bugs are not limited to a few high-profile cases that lead to retractions and are widely reported. Here I estimate that in fact most scientific results are probably wrong if data have passed through a computer, and that these errors may remain largely undetected. The opportunities for both subtle and profound errors in software and data management are boundless, yet they remain surprisingly underappreciated.

---

## How can we do better?

+ Scripted analyses: no point-and-click tools, _especially_ spreadsheet calculations
+ Revision control systems
+ Documentation, documentation, documentation
+ Coding standards/conventions
+ Pair programming
+ Issue trackers
+ Code reviews (and in teaching, grade students' *code*, not just their *output*)
+ Code tests: unit, integration, coverage, regression

---

## Integration tests

http://imgur.com/qSN5SFR by Datsun280zxt

---

### Spreadsheets might be OK for data entry.

--

### But not for calculations.

--

+ Conflate input, output, code, presentation; facilitate & obscure error

--

+ According to KPMG and PWC, [over 90% of corporate spreadsheets have errors](http://www.theregister.co.uk/2005/04/22/managing_spreadsheet_fraud/)

--

+ Not just errors: bugs in Excel too: +, *, random numbers, statistical routines

--

+ .red["Stress tests" of international banking system use Excel simulations!]

---

### Relying on spreadsheets for important calculations is like driving drunk:

--

### .red[No matter how carefully you do it, a wreck is likely.]

---

### Checklist

1. Don't use spreadsheets for calculations.
1. Script your analyses, including data cleaning and munging.
1. Document your code.
1. Record and report software versions, including library dependencies.
1. Use unit tests, integration tests, coverage tests, regression tests.
1. Avoid proprietary software that doesn't have an open-source equivalent.
1. Report all analyses tried (transformations, tests, selections of variables, models, etc.) before arriving at the one emphasized.
1. Make code and code tests available.
1. Make data available in an open format; provide data dictionary.
1. Publish in open journals.

---

### Personal best, with Anne Boring & Kellie Ottoboni

Code on [GitHub](https://github.com/kellieotto/SET-and-Gender-Bias), data on [Merritt](http://n2t.net/ark:/b6078/d1mw2k), published in [ScienceOpen](https://www.scienceopen.com/document/vid/818d8ec0-5908-47d8-86b4-5dc38f04b23e)

---

## Why open publication?

+ Research funded by agencies
+ Conducted at universities by faculty et al.
+ Refereed/edited for journal by faculty at no cost to journal
+ Page charges paid by agencies
+ Libraries/readers have to pay to view!

Exclusionary and morally questionable.

---

#### [ARL 1986-2016](http://arl.nonprofitsoapbox.com/storage/documents/expenditure-trends.pdf)

Also [CFUCBL report](http://evcp.berkeley.edu/sites/default/files/FINAL_CFUCBL_report_10.16.13.pdf)

---

## What's the role of a journal?

1. Gatekeeping/QC by editors & "peers"
2. Distribution & marketing
3. Archive

--

Failing at (1); largely unnecessary for (2) and (3).

---

### Alternatives

Open publication, post-publication non-anonymous review (e.g., [F1000Research](http://f1000research.com/), [ScienceOpen](https://www.scienceopen.com/))

---

## Statistical reproducibility

+ what does it mean to reproduce a scientific result?
+ _P_-hacking; straw-man null hypotheses
+ changing endpoints http://compare-trials.org/
+ the false discovery rate
+ _reproducibility_ vs. _preproducibility_

---

## Reproducibility in teaching

+ Using Jupyter notebooks & GitHub for new teaching materials and tech talks, e.g.
    + https://github.com/pbstark/MX14
    + https://github.com/pbstark/Padova15
    + https://github.com/pbstark/Nonpar
    + https://github.com/pbstark/PhysEng
+ Lorena Barba has great examples, e.g., http://lorenabarba.com/blog/announcing-aeropython/

---

### It's hard to teach an old dog new tricks.

--

### .blue[So work with puppies!]

--

[Statistics 159/259](http://www.jarrodmillman.com/stat159-fall2015/); Statistics 222; DSE 421

[BIDS Reproducibility working group](http://bids.berkeley.edu/working-groups/reproducibility-and-open-science) (Case study book in progress!)

[Data Carpentry](http://www.datacarpentry.org/), [Software Carpentry](http://software-carpentry.org/), others

---

### Pledge

> A. _I will not referee any article that does not contain enough information to tell whether it is correct._

> B. _Nor will I submit any such article for publication._

> C. _Nor will I cite any such article published after 1/1/2017._

See also _Open science peer review oath_ http://f1000research.com/articles/3-271/v2

---

#### Recap

+ What does it mean to work reproducibly and transparently?
    + Show your work!

--

+ Why bother?
    + Right thing to do; evidence of correctness; re-use; wider reach

--

+ Whom does it benefit, and how?
    + you, your discipline, science: fewer errors, faster progress, "stand on shoulders", visibility

--

+ What will it cost me?
    + learn new, better work habits and perhaps tools

--

+ Will I need to learn new tools?
    + no, but it helps
    + your students probably already know the tools: encourage them (& learn from them)!

---

#### Recap

+ What resources help?
    + [Data Carpentry](http://www.datacarpentry.org/), [Software Carpentry](http://software-carpentry.org/)
    + [RunMyCode](http://www.runmycode.org/), [Research Compendia](http://researchcompendia.org/), [FigShare](https://figshare.com/)
    + [Jupyter](http://jupyter.org/) (>40 languages!), [Sweave](https://www.statistik.lmu.de/~leisch/Sweave/), [RStudio](https://www.rstudio.com/), [knitr](http://yihui.name/knitr/)
    + [BCE](http://bce.berkeley.edu/), [Docker](https://www.docker.com/), [VirtualBox](https://www.virtualbox.org/), [AWS](https://aws.amazon.com/), ..., [GitHub](https://github.com/), [TravisCI](https://travis-ci.org/), [noWorkFlow](https://pypi.python.org/pypi/noworkflow), ...
    + Reproducibility initiative http://validation.scienceexchange.com/#/reproducibility-initiative
    + Best practices for scientific software dev http://arxiv.org/pdf/1210.0530v4.pdf
    + [Federation of American Societies for Experimental Biology](https://www.faseb.org/Portals/2/PDFs/opa/2016/FASEB_Enhancing%20Research%20Reproducibility.pdf)
    + What is open science? http://openscienceasap.org/open-science/

--

+ What's the simplest thing I can do to make my work more reproducible?
    + Use the checklist
    + Start a repo for your next project
    + Ask your students for help

--

+ How can I move my discipline, my institution, and science as a whole towards reproducibility?
    + the pledges!
    + encourage students & postdocs to work reproducibly
    + don't grade assignments on output alone: check code
    + don't treat journal masthead as proxy for work quality: look at the work
    + check actual evidence in hiring/promotion cases: don't beancount, ignore impact factor

---

## .blue[Show your work!]

.center[_Perfection is impossible, but improvement is easy._]
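
---

### Backup: recording software versions

The checklist item "Record and report software versions, including library dependencies" can be partly automated. The following is a minimal sketch, not taken from the talk: the function names `environment_report` and `report_to_json` and the example package list are hypothetical, and only the Python standard library (`platform`, `json`, `importlib.metadata`) is assumed.

```python
import json
import platform
from importlib import metadata


def environment_report(packages):
    """Return a dict describing the interpreter, the OS, and package versions.

    `packages` is a list of distribution names (as used by pip).
    Packages that are not installed are recorded as None rather than skipped,
    so the report shows what was looked for as well as what was found.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "packages": versions,
    }


def report_to_json(report):
    """Serialize the report with sorted keys so that diffs stay readable."""
    return json.dumps(report, indent=2, sort_keys=True)
```

One possible use: call `report_to_json(environment_report(["numpy", "scipy"]))` at the top of an analysis script (the package names here are placeholders) and save the resulting text alongside the figures and tables it produced, under revision control.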