Examples of Reading Web Pages with R

As an example of how to extract information from a web page, consider

the task of extracting the spring baseball schedule for the Cal Bears

from http://calbears.cstv.com/sports/m-basebl/sched/cal-m-basebl-sched.html .

1 Reading a web page into R

Read the contents of the page into a vector of character strings with the

readLines function:

> thepage = readLines('http://calbears.cstv.com/sports/m-basebl/sched/cal-m-basebl-sched.html')

Note: When you're reading a web page, make a local copy for testing;

as a courtesy to the owner of the web site whose pages you're using, don't

overload their server by constantly rereading the page.

To make a copy from inside of R, look at the download.file function.

You could also save

a copy of the result of using readLines, and practice on that until

you've got everything working correctly.
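One way to follow this advice is a small helper that downloads the page only when no local copy exists yet. This is just a sketch: the name read_cached, the placeholder URL and the stand-in file are made up for illustration, and the demonstration creates the local file itself so that nothing is actually fetched.

```r
# Hypothetical helper (not part of the lecture): read a page from a local
# copy, downloading it only if the copy does not exist yet.
read_cached = function(url, localfile){
    if(!file.exists(localfile)) download.file(url, localfile, quiet=TRUE)
    readLines(localfile, warn=FALSE)
}

# Demonstration without network access: create a stand-in local copy first,
# so read_cached finds the file and never contacts the placeholder URL.
localfile = tempfile(fileext='.html')
writeLines(c('<html>', '<td class="row-text">02/21/09</td>', '</html>'), localfile)
thepage = read_cached('http://example.invalid/schedule.html', localfile)
length(thepage)
```

Deleting the local file forces a fresh download the next time the helper is called.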

Now we have to focus in on what we're trying to extract. The first step

is finding where it is. If you look at the web page, you'll see that the

title "Opponent / Event" is right above the data we want. We can locate this line

using the grep function:

> grep('Opponent / Event',thepage)

[1] 357

If we look at the lines following this marker, we'll notice that the first

date on the schedule can be found in line 400, with the other information

following after:

> thepage[400:407]

[1] " <tr id=\"990963\" title=\"2009,1,21,16,00,00\" valign=\"TOP\" bgcolor=\"#d1d1d1\" class=\"event-listing\">"

[2] " "

[3] " <td class=\"row-text\">02/21/09</td>"

[4] " "

[5] " <td class=\"row-text\">vs. UC Riverside</td>"

[6] " "

[7] " <td class=\"row-text\">Berkeley, Calif.</td>"

[8] " "

Based on the previous step, the data that we want is always preceded by

the HTML tag "<td class="row-text">", and followed by

"</td>". Let's grab all the lines that have that pattern:

> mypattern = '<td class="row-text">([^<]*)</td>'

> datalines = grep(mypattern,thepage[400:length(thepage)],value=TRUE)

I used value=TRUE, so I wouldn't have to worry about the

indexing when I restricted myself to the lines from 400 on. Also notice that

I've already tagged the part that I want, in preparation for the final call to

gsub.
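To see what value=TRUE saves us from, here is a small sketch on a made-up five-line "page" (the lines and the starting position are invented for illustration). Without value=TRUE, grep reports positions inside the subset, and those have to be shifted before they can index the full page.

```r
# Made-up page: the data of interest starts at line 3
page = c('<html>',
         '<th>Opponent / Event</th>',
         '<td class="row-text">02/21/09</td>',
         ' ',
         '<td class="row-text">vs. UC Riverside</td>')
start = 3
pat = '<td class="row-text">([^<]*)</td>'

# Without value=TRUE, grep returns positions within the subset ...
idx = grep(pat, page[start:length(page)])
idx                      # 1 3
# ... which must be shifted to index the full page
page[idx + start - 1]

# With value=TRUE, the matching lines come back directly
grep(pat, page[start:length(page)], value=TRUE)
```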

Now that I've got the lines where my data is, I can use gregexpr,

then getexpr (from the previous lecture), and gsub to

extract the information without the HTML tags:

> getexpr = function(s,g)substring(s,g,g+attr(g,'match.length')-1)

> gg = gregexpr(mypattern,datalines)

> matches = mapply(getexpr,datalines,gg)

> result = gsub(mypattern,'\\1',matches)

> names(result) = NULL

> result[1:10]

[1] "02/21/09" "vs. UC Riverside" "Berkeley, Calif." "1:00 p.m. PT"

[5] "02/22/09" "vs. Vanderbilt" "Berkeley, Calif." "1:00 p.m. PT"

[9] "02/23/09" "vs. Vanderbilt"
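More recent versions of R also have a base function, regmatches, that can stand in for getexpr. The sketch below is an alternative to the approach above, not the method used in these notes, and it runs on three made-up lines rather than on the real page.

```r
datalines = c(' <td class="row-text">02/21/09</td>',
              ' <td class="row-text">vs. UC Riverside</td>',
              ' <td class="row-text">Berkeley, Calif.</td>')
mypattern = '<td class="row-text">([^<]*)</td>'

# regmatches() returns the matched substrings, one list element per line
matches = unlist(regmatches(datalines, gregexpr(mypattern, datalines)))

# keep only the tagged part, as before
result = gsub(mypattern, '\\1', matches)
result
```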

It seems pretty clear that we've extracted just what we wanted - to make

it more usable, we'll convert it to a data frame and provide some titles.

Since it's hard to describe how to convert a vector to a data frame, we'll

use a matrix as an intermediate step. Since there are four pieces of

information (columns) for each game (row), a matrix is a natural choice:

> schedule = as.data.frame(matrix(result,ncol=4,byrow=TRUE))

> names(schedule) = c('Date','Opponent','Location','Result')

> head(schedule)

Date Opponent Location Result

1 02/21/09 vs. UC Riverside Berkeley, Calif. 1:00 p.m. PT

2 02/22/09 vs. Vanderbilt Berkeley, Calif. 1:00 p.m. PT

3 02/23/09 vs. Vanderbilt Berkeley, Calif. 1:00 p.m. PT

4 02/25/09 at Santa Clara Santa Clara, Calif. 6:00 p.m. PT

5 02/27/09 at Long Beach State Long Beach, Calif. 6:30 p.m. PT

6 02/28/09 at Long Beach State Long Beach, Calif. 2:00 p.m. PT
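The reshaping step quietly assumes that every game contributed exactly four fields. Here is a sketch, on made-up values for two games, that checks that assumption first; the conversion of the Date column with as.Date is an optional extra step that isn't part of the example above.

```r
result = c('02/21/09', 'vs. UC Riverside', 'Berkeley, Calif.', '1:00 p.m. PT',
           '02/22/09', 'vs. Vanderbilt',   'Berkeley, Calif.', '1:00 p.m. PT')

# Guard against a missing or extra field, which would shift every later row
stopifnot(length(result) %% 4 == 0)

schedule = as.data.frame(matrix(result, ncol=4, byrow=TRUE), stringsAsFactors=FALSE)
names(schedule) = c('Date','Opponent','Location','Result')

# Optional: turn the mm/dd/yy strings into Date objects
schedule$Date = as.Date(schedule$Date, format='%m/%d/%y')
schedule
```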

2 Another Example

At http://www.imdb.com/chart is a

box-office summary of the ten top movies, along with their gross profits

for the current weekend, and their total gross profits. We would like

to make a data frame with that information. As always, the first part of

the solution is to read the page into R, and use an anchor to find the

part of the data that we want. In this case, the table has column

headings, including one for "Rank".

> x = readLines('http://www.imdb.com/chart/')

> grep('Rank',x)

[1] 1141 1393 1651

Starting with line 1141 we can look at the data to see where

the information is. A little experimentation shows that the useful

data starts on line 1157:

> x[1157:1165]

[1] " <td class=\"chart_even_row\">"

[2] " <a href=\"/title/tt0989757/\">Dear John</a> (2010/I)"

[3] " </td>"

[4] " <td class=\"chart_even_row\" style=\"text-align: right; padding-right: 20px\">"

[5] " $30.5M"

[6] " </td>"

[7] " <td class=\"chart_even_row\" style=\"text-align: right\">"

[8] " $30.5M"

[9] " </td>"

There are two types of lines that contain useful data: the ones with the title, and

the ones that begin with some blanks followed by a dollar sign. Here's a regular

expression that will pull out both those lines:

> goodlines = '<a href="/title[^>]*>(.*)</a>.*$|^ *\\$'

> try = grep(goodlines,x,value=TRUE)

Looking at the beginning of try, it seems like we got what we want:

> try[1:10]

[1] " <a href=\"/title/tt1001508/\">He's Just Not That Into You</a> (2009)"

[2] " $27.8M"

[3] " $27.8M"

[4] " <a href=\"/title/tt0936501/\">Taken</a> (2008/I)"

[5] " $20.5M"

[6] " $53.6M"

[7] " <a href=\"/title/tt0327597/\">Coraline</a> (2009)"

[8] " $16.8M"

[9] " $16.8M"

[10] " <a href=\"/title/tt0838232/\">The Pink Panther 2</a> (2009)"
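When a pattern uses | to combine alternatives, it can help to check each alternative separately. The sketch below does that on a few made-up lines in the style of the page; the names titlepat and dollarpat are just for illustration, and pasting them together reproduces the goodlines pattern.

```r
x = c(' <td class="chart_even_row">',
      ' <a href="/title/tt0989757/">Dear John</a> (2010/I)',
      ' </td>',
      ' $30.5M',
      ' </td>')
titlepat  = '<a href="/title[^>]*>(.*)</a>.*$'
dollarpat = '^ *\\$'
goodlines = paste(titlepat, dollarpat, sep='|')

# Each alternative can be checked on its own ...
grepl(titlepat, x)
grepl(dollarpat, x)
# ... and the combined pattern matches a line if either one does
both = grepl(goodlines, x)
x[both]
```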

Sometimes the trickiest part of getting the data off a webpage is figuring

out exactly the part you need. In this case, there is a lot of extraneous information

after the table we want. By examining the output, we can see that we only want the

first 30 entries. We also need to remove the extra information from the title

line. We can use the sub function with a modified version of our regular

expression:

> try = try[1:30]

> try = sub('<a href="/title[^>]*>(.*)</a>.*$','\\1',try)

> head(try)

[1] " He's Just Not That Into You"

[2] " $27.8M"

[3] " $27.8M"

[4] " Taken"

[5] " $20.5M"

[6] " $53.6M"

Once the spaces at the beginning of each line are

removed, we can rearrange the data into a 3-column data frame:

> try = sub('^ *','',try)

> movies = data.frame(matrix(try,ncol=3,byrow=TRUE))

> names(movies) = c('Name','Wkend Gross','Total Gross')

> head(movies)

Name Wkend Gross Total Gross

1 Dear John $30.5M $30.5M

2 Avatar $22.9M $629M

3 From Paris with Love $8.16M $8.16M

4 Edge of Darkness $6.86M $28.9M

5 Tooth Fairy $6.63M $34.5M

6 When in Rome $5.55M $20.9M
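The gross figures are still character strings, so they can't be summed or plotted as they stand. As a sketch, and assuming that every value has the form of a dollar sign, a number and a trailing M (anything else would come out as NA), they could be converted like this:

```r
gross = c('$30.5M', '$629M', '$8.16M')

# strip the dollar sign and the trailing M, then scale to dollars
millions = as.numeric(sub('^\\$([0-9.]+)M$', '\\1', gross))
dollars = millions * 1e6
dollars
```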

3 Dynamic Web Pages

While reading data from static web pages as in the previous examples can be

very useful (especially if you're extracting data from many pages), the

real power of techniques like this has to do with dynamic pages, which accept

queries from users and return results based on those queries. For example,

an enormous amount of information about genes and proteins can be found at

the National Center for Biotechnology Information website

(http://www.ncbi.nlm.nih.gov/), much

of it available through query forms. If you're only performing a few queries,

it's no problem using the web page, but for many queries, it's beneficial

to automate the process.

Here is a simple example that illustrates the concept of accessing dynamic

information from web pages. The page

http://finance.yahoo.com provides information about

stocks; if you enter a stock symbol on the page (for example

aapl for Apple Computer), you will be directed to a page whose

URL (as it appears in the browser address bar) is

http://finance.yahoo.com/q?s=aapl&x=0&y=0

The way that stock symbols are mapped to this URL is pretty

obvious. We'll write an R function that will extract the current price

of whatever stock we're interested in.

The first step in working with a page like this is to download

a local copy to play with, and to read the page into a vector of character

strings:

> download.file('http://finance.yahoo.com/q?s=aapl&x=0&y=0','quote.html')

trying URL 'http://finance.yahoo.com/q?s=aapl&x=0&y=0'

Content type 'text/html; charset=utf-8' length unknown

opened URL

.......... .......... .......... .........

downloaded 39Kb

> x = readLines('quote.html')

To get a feel for what we're looking for, notice that the words "Last Trade:"

appear before the current quote. Let's look at the line containing

this string:

> grep('Last Trade:',x)

[1] 25

> nchar(x[25])

[1] 873

Since there are over 800 characters in the line, we don't want

to view it directly. Let's use gregexpr to narrow down the search:

> gregexpr('Last Trade:',x[25])

[[1]]

[1] 411

attr(,"match.length")

[1] 11

This shows that the string "Last Trade:" starts at character 411.

We can use substring to see the relevant part of the line:

> substring(x[25],411,500)

[1] "Last Trade:</th><td class=\"yfnc_tabledata1\"><big><b><span id=\"yfs_l10_aapl\">196.19</span><"
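The same "find it, then look at a window around it" idea can be tried without a downloaded page. The sketch below builds a made-up long line and uses regexpr, which reports only the first match, instead of gregexpr; the window width of 40 characters is arbitrary.

```r
# Made-up long line: 410 filler characters, then the text of interest
filler = paste(rep('x', 410), collapse='')
line = paste(filler,
             'Last Trade:</th><td><big><b><span id="q">196.19</span></b>',
             sep='')

pos = regexpr('Last Trade:', line, fixed=TRUE)   # position of the first match
as.integer(pos)

# show a window of 40 characters starting at the match
window = substring(line, pos, pos + 39)
window
```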

There's plenty of context - we want the part surrounded by <big><b><span ...

and </span>. One easy way to grab that part is to use a tagged

regular expression with gsub:

> gsub('^.*<big><b><span [^>]*>([^<]*)</span>.*$','\\1',x[25])

[1] "196.19"

This suggests the following function:

> getquote = function(sym){

+ baseurl = 'http://finance.yahoo.com/q?s='

+ myurl = paste(baseurl,sym,'&x=0&y=0',sep='')

+ x = readLines(myurl)

+ q = gsub('^.*<big><b><span [^>]*>([^<]*)</span>.*$','\\1',grep('Last Trade:',x,value=TRUE))

+ as.numeric(q)

+}

As always, functions like this should be tested:

> getquote('aapl')

[1] 196.19

> getquote('ibm')

[1] 123.21

> getquote('nok')

[1] 13.35
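One thing to keep in mind is that gsub returns its input unchanged when the pattern doesn't match, so if the page layout changes, the function will try to convert an entire line of HTML to a number. Here is a sketch of a more defensive extraction step; the name extractquote is made up, and it works on lines that have already been read, so it can be tried without a connection.

```r
# Hypothetical variant of the extraction step, applied to lines already read
extractquote = function(x){
    pat = '^.*<big><b><span [^>]*>([^<]*)</span>.*$'
    line = grep('Last Trade:', x, value=TRUE)
    # gsub leaves a non-matching string untouched, so test for a match first
    line = line[grepl(pat, line)]
    if(length(line) == 0) return(NA_real_)
    as.numeric(gsub(pat, '\\1', line[1]))
}

good = 'Last Trade:</th><td class="yfnc_tabledata1"><big><b><span id="yfs_l10_aapl">196.19</span><'
extractquote(c('<html>', good))
extractquote(c('<html>', 'layout changed'))
```

Returning NA instead of stopping is a design choice; calling stop() with a message would be just as reasonable if a failed lookup should not pass silently.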

This function provides only a single quote; a little exploration of the

yahoo finance website shows that we can get CSV files with historical

data by using a URL of the form:

http://ichart.finance.yahoo.com/table.csv?s=xxx

where xxx is the symbol of interest.

Since it's a comma-separated file, we can use read.csv to read the chart.

gethistory = function(symbol)

read.csv(paste('http://ichart.finance.yahoo.com/table.csv?s=',symbol,sep=''))

Here's a simple test:

> aapl = gethistory('aapl')

> head(aapl)

Date Open High Low Close Volume Adj.Close

1 2009-02-20 89.40 92.40 89.00 91.20 26780200 91.20

2 2009-02-19 93.37 94.25 90.11 90.64 32945600 90.64

3 2009-02-18 95.05 95.85 92.72 94.37 24417500 94.37

4 2009-02-17 96.87 97.04 94.28 94.53 24209300 94.53

5 2009-02-13 98.99 99.94 98.12 99.16 21749200 99.16

6 2009-02-12 95.83 99.75 95.83 99.27 29185300 99.27

Unfortunately, if we try to use the Date

column in plots, it will not work properly, since R has

stored it as a factor. The format of the date is the default

for the as.Date function, so we can modify our

function as follows:

gethistory = function(symbol){

data = read.csv(paste('http://ichart.finance.yahoo.com/table.csv?s=',symbol,sep=''))

data$Date = as.Date(data$Date)

data

}
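The date conversion can be tried on a few lines of CSV text without downloading anything. In this sketch the two data rows are copied from the output above, and the header line is an assumption (read.csv turns a heading like "Adj Close" into Adj.Close). Sorting by date is an optional extra step.

```r
csvtext = 'Date,Open,High,Low,Close,Volume,Adj Close
2009-02-20,89.40,92.40,89.00,91.20,26780200,91.20
2009-02-19,93.37,94.25,90.11,90.64,32945600,90.64'

data = read.csv(text=csvtext)
class(data$Date)             # factor or character, depending on the R version
data$Date = as.Date(data$Date)
class(data$Date)

# rows arrive newest first; sort by date if chronological order is needed
data = data[order(data$Date),]
names(data)
```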

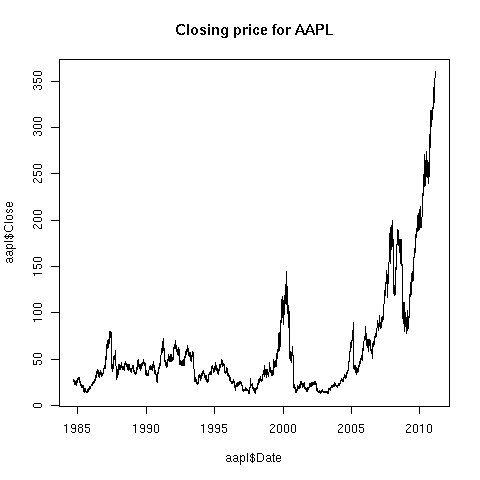

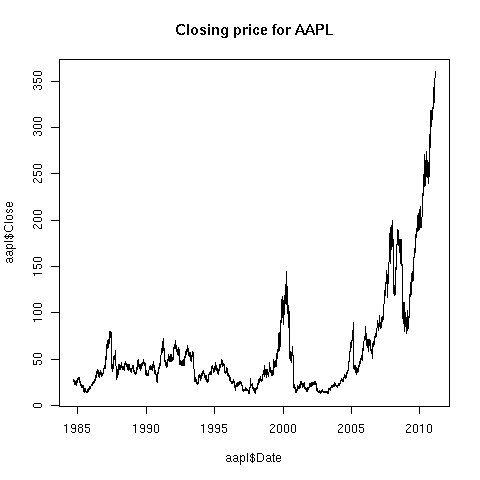

Now, we can produce plots with no problems:

> aapl = gethistory('aapl')

> plot(aapl$Date,aapl$Close,main='Closing price for AAPL',type='l')

The plot is shown below:

This suggest a function for plotting any of the variables in the CSV file:

This suggest a function for plotting any of the variables in the CSV file:

plothistory = function(symbol,what){

what = match.arg(what,c('Open','High','Low','Close','Volume','Adj.Close'))

data = gethistory(symbol)

plot(data$Date,data[,what],main=paste(what,'price for',symbol),type='l')

invisible(data)

}

This function introduces several features of functions that we

haven't seen yet.

- The match.arg function lets us specify a list of acceptable values

for a parameter being passed to a function, so that an error will occur

if you use a non-existent variable:

> plothistory('aapl','Last')

Error in match.arg("what", c("Open", "High", "Low", "Close", "Volume", :

'arg' should be one of “Open”, “High”, “Low”, “Close”, “Volume”, “Adj.Close”

- The invisible function prevents the returned value (data in

this case) from being printed when the output of the function is not

assigned, but also allows us to assign the output to a variable if we want

to use it later:

> plothistory('aapl','Close')

will produce a plot without displaying the data to the screen, but

> aapl.close = plothistory('aapl','Close')

will produce the plot, and store the data in aapl.close.
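Both features can be tried out without downloading or plotting anything. The function below is a made-up stand-in for plothistory (the name pickcolumn and the tiny data frame are for illustration only); it uses the form of match.arg that takes the acceptable values from the function's own argument list.

```r
# Hypothetical stand-in for plothistory that needs no download or plot
pickcolumn = function(data, what=c('Open','Close')){
    what = match.arg(what)      # error unless what matches one of the choices
    invisible(data[[what]])     # returned, but not printed automatically
}

d = data.frame(Open=c(89.40,93.37), Close=c(91.20,90.64))
pickcolumn(d, 'Close')                  # prints nothing
closes = pickcolumn(d, 'Close')         # the value is still available
closes
withVisible(pickcolumn(d, 'Close'))$visible

# an unacceptable value raises an error, which is caught here for display
res = tryCatch(pickcolumn(d, 'Last'), error=function(e) 'rejected')
res
```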

4 Another Example

When using google, it's sometimes inconvenient to have to click through

all the pages. Let's write a function that will return the web links

from a google search. If you type a search term into google, for example

a search for "introduction to r", you'll notice that the address bar of

your browser looks something like this:

http://www.google.com/search?q=introduction+to+r&ie=utf-8&oe=utf-8&aq=t&rls=com.ubuntu:en-US:unofficial&client=firefox-a

For our purposes, we only need the "q=" part of the

search. For our current example, that would be the URL

http://www.google.com/search?q=introduction+to+r

Note that, since blanks aren't allowed in URLs, plus signs are

used in place of spaces. If we were to click on the "Next" link at the

bottom of the page, the URL changes to something like

http://www.google.com/search?hl=en&safe=active&client=firefox-a&rls=com.ubuntu:en-US:unofficial&hs=xHq&q=introduction+to+r&start=10&sa=N

For our purposes, we only need to add the &start= argument

to the web page. Since google displays 10 results per page, the second page

will have start=10, the next page will have start=20, and

so on. Let's read in the first page of this search into R:
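The URL rules just described can be put into a small helper. This is a sketch with a made-up name (searchurl); it handles only spaces, so a search term containing characters such as & or + would need proper encoding, which isn't attempted here.

```r
# Hypothetical helper: build the search URL for a term and a zero-based page
searchurl = function(term, pg=0){
    qurl = paste('http://www.google.com/search?q=', gsub(' ','+',term), sep='')
    if(pg > 0) qurl = paste(qurl, '&start=', pg*10, sep='')
    qurl
}

searchurl('introduction to r')
searchurl('introduction to r', 1)
```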

> z = readLines('http://www.google.com/search?q=introduction+to+r')

Warning message:

In readLines("http://www.google.com/search?q=introduction+to+r") :

incomplete final line found on 'http://www.google.com/search?q=introduction+to+r'

As always, you can safely ignore the message about the incomplete

final line.

Since we're interested in the web links, we only want lines with

"href=" in them. Let's check how many lines we've got,

how long they are, and which ones contain the href string:

> length(z)

[1] 9

> nchar(z)

Error in nchar(z) : invalid multibyte string 3

The error message looks pretty bad - fortunately it's very easy

to fix. Basically, the error means that, for the computer's current setup,

there's a character that isn't recognized. We can force R to recognize

every character by issuing the following command:

> Sys.setlocale('LC_ALL','C')

[1] "LC_CTYPE=C;LC_NUMERIC=C;LC_TIME=C;LC_COLLATE=C;LC_MONETARY=C;LC_MESSAGES=en_US.UTF-8;LC_PAPER=en_US.UTF-8;LC_NAME=C;LC_ADDRESS=C;LC_TELEPHONE=C;LC_MEASUREMENT=en_US.UTF-8;LC_IDENTIFICATION=C"

In the "C" locale, R treats each byte as a single character, so nchar no longer fails on bytes that it can't interpret:

> nchar(z)

[1] 561 125 25086 351 131 520 449 5 17
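If you'd rather not change the locale for the whole session, there are other ways around the problem. The sketch below uses a made-up string containing a single Latin-1 byte: counting bytes avoids the issue, and iconv can convert the text if you know its encoding (Latin-1 is an assumption here; the encoding of a real page has to be checked).

```r
x = 'caf\xe9'                    # made-up string containing a Latin-1 byte
validUTF8(x)                     # FALSE: \xe9 on its own is not valid UTF-8

# counting bytes does not require a valid encoding
nchar(x, type='bytes')

# converting works only if the source encoding is known
fixed = iconv(x, from='latin1', to='UTF-8')
validUTF8(fixed)
```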

> grep('href *=',z)

[1] 3

It's pretty clear that all of the links are on the third line.

Now we can construct a tagged regular expression to grab all the

links.

> hrefpat = 'href *= *"([^"]*)"'

> getexpr = function(s,g)substring(s,g,g+attr(g,'match.length')-1)

> gg = gregexpr(hrefpat,z[[3]])

> res = mapply(getexpr,z[[3]],gg)

> res = sub(hrefpat,'\\1',res)

> res[1:10]

[1] "http://images.google.com/images?q=introduction+to+r&um=1&ie=UTF-8&sa=N&hl=en&tab=wi"

[2] "http://video.google.com/videosearch?q=introduction+to+r&um=1&ie=UTF-8&sa=N&hl=en&tab=wv"

[3] "http://maps.google.com/maps?q=introduction+to+r&um=1&ie=UTF-8&sa=N&hl=en&tab=wl"

[4] "http://news.google.com/news?q=introduction+to+r&um=1&ie=UTF-8&sa=N&hl=en&tab=wn"

[5] "http://www.google.com/products?q=introduction+to+r&um=1&ie=UTF-8&sa=N&hl=en&tab=wf"

[6] "http://mail.google.com/mail/?hl=en&tab=wm"

[7] "http://www.google.com/intl/en/options/"

[8] "/preferences?hl=en"

[9] "https://www.google.com/accounts/Login?hl=en&continue=http://www.google.com/search%3Fq%3Dintroduction%2Bto%2Br"

[10] "http://www.google.com/webhp?hl=en"

We don't want the internal (google) links - we want external links which

will begin with "http://". Let's extract all the external links,

and then eliminate the ones that just go back to google:

> refs = res[grep('^https?:',res)]

> refs = refs[-grep('google.com/',refs)]

> refs[1:3]

[1] "http://cran.r-project.org/doc/manuals/R-intro.pdf"

[2] "http://74.125.155.132/search?q=cache:d4-KmcWVA-oJ:cran.r-project.org/doc/manuals/R-intro.pdf+introduction+to+r&cd=1&hl=en&ct=clnk&gl=us&ie=UTF-8"

[3] "http://74.125.155.132/search?q=cache:d4-KmcWVA-oJ:cran.r-project.org/doc/manuals/R-intro.pdf+introduction+to+r&cd=1&hl=en&ct=clnk&gl=us&ie=UTF-8"

If you're familiar with google, you may recognize these as the links to

google's cached results. We can easily eliminate them:

> refs = refs[-grep('cache:',refs)]

> length(refs)

[1] 10
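A word of caution about the refs[-grep(...)] idiom: when nothing matches, grep returns an empty vector, and a negative empty index selects no elements at all. The sketch below shows the problem on two made-up results, along with a version using grepl that behaves the same way whether or not anything matches.

```r
refs = c('http://cran.r-project.org/doc/manuals/R-intro.pdf',
         'http://www.stat.berkeley.edu/~spector/R.pdf')

# No element contains 'cache:', so grep returns integer(0) and the
# negative index selects nothing at all
dropped = refs[-grep('cache:', refs)]
length(dropped)

# A logical index from grepl keeps everything that does not match
kept = refs[!grepl('cache:', refs)]
length(kept)
```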

We can test these same steps with some of the other pages from this

query:

> z = readLines('http://www.google.com/search?q=introduction+to+r&start=10')

Warning message:

In readLines("http://www.google.com/search?q=introduction+to+r&start=10") :

incomplete final line found on 'http://www.google.com/search?q=introduction+to+r&start=10'

> hrefpat = 'href *= *"([^"]*)"'

> getexpr = function(s,g)substring(s,g,g+attr(g,'match.length')-1)

> gg = gregexpr(hrefpat,z[[3]])

> res = mapply(getexpr,z[[3]],gg)

> res = sub(hrefpat,'\\1',res)

> refs = res[grep('^https?:',res)]

> refs = refs[-grep('google.com/',refs)]

> refs = refs[-grep('cache:',refs)]

> length(refs)

[1] 10

Once again, it found all ten links. This obviously suggests a

function:

googlerefs = function(term,pg=0){

getexpr = function(s,g)substring(s,g,g+attr(g,'match.length')-1)

qurl = paste('http://www.google.com/search?q=',term,sep='')

if(pg > 0)qurl = paste(qurl,'&start=',pg * 10,sep='')

qurl = gsub(' ','+',qurl)

z = readLines(qurl)

hrefpat = 'href *= *"([^"]*)"'

wh = grep(hrefpat,z)

gg = gregexpr(hrefpat,z[[wh]])

res = mapply(getexpr,z[[wh]],gg)

res = sub(hrefpat,'\\1',res)

refs = res[grep('^https?:',res)]

refs = refs[!grepl('google.com/|cache:',refs)]

names(refs) = NULL

refs[!is.na(refs)]

}

Now suppose that we want to retrieve the links for the first

ten pages of query results:

> links = sapply(0:9,function(pg)googlerefs('introduction to r',pg))

> links = unlist(links)

> head(links)

[1] "http://cran.r-project.org/doc/manuals/R-intro.pdf"

[2] "http://cran.r-project.org/manuals.html"

[3] "http://www.biostat.wisc.edu/~kbroman/Rintro/"

[4] "http://faculty.washington.edu/tlumley/Rcourse/"

[5] "http://www.stat.cmu.edu/~larry/all-of-statistics/=R/Rintro.pdf"

[6] "http://www.stat.berkeley.edu/~spector/R.pdf"
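When looping over many pages, two details are worth a thought: sapply returns a matrix rather than a list when every page happens to yield the same number of links, and rapid-fire requests aren't very polite to the server. Here is a sketch using lapply and a short pause. To keep it runnable offline, a made-up function (fakerefs) stands in for googlerefs, and the length of the pause is arbitrary.

```r
# Stand-in for googlerefs so the sketch runs offline (hypothetical)
fakerefs = function(term, pg)
    paste('http://example.org/', gsub(' ','-',term), '/', pg*10 + 1:10, sep='')

pages = lapply(0:2, function(pg){
    Sys.sleep(0.1)               # pause between requests; the length is arbitrary
    fakerefs('introduction to r', pg)
})

# lapply always returns a list; flatten it and drop any repeated links
links = unique(unlist(pages))
length(links)
```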

File translated from TeX by TTH, version 3.67, on 17 Feb 2010, 15:39.
