Hypothesis Testing

1 Hypothesis Testing

Much of classical statistics is concerned with the idea of hypothesis testing. This

is a formal framework that we can use to pose questions about a variety of topics in

a consistent form that lets us apply statistical techniques to make statements about

how results that we've gathered relate to questions that we're interested in. If we

carefully follow the rules of hypothesis testing, then we can confidently talk about

the probability of events that we've observed under a variety of hypotheses, and put

our faith in those hypotheses that seem the most reasonable. Central to the idea of

hypothesis testing is the notion of null and alternative hypotheses. We want to present

our question in the form of a statement that assumes that nothing has happened (the null

hypothesis) versus a statement that describes a specific relation or condition

that might better explain our data.

For example, suppose we've got a coin, and we want to find out if it's true, that is, if,

when we flip the coin, are we as likely to see heads as we are to see tails. A null

hypothesis for this situation could be

H0: We're just as likely to get heads as tails when we flip the coin.

A suitable alternative hypothesis might be:

Ha: We're more likely to see either heads or tails when we flip the coin.

An alternative hypothesis such as this is called two-sided, since we'll reject the null

hypothesis if heads are more likely or if tails are more likely. We could use a one-sided

alternative hypothesis like "We're more likely to see tails than heads," but unless you've

got a good reason to believe that it's absolutely impossible to see more heads than tails,

we usually stick to two-sided alternative hypotheses.

Now we need to perform an experiment in order to help test our hypothesis. Obviously,

tossing the coin once or twice and counting up the results won't help very much. Suppose

I say that in order to test the null hypothesis that heads are just as likely as tails,

I'm going to toss the coin 100 times and record the results. Carrying out this experiment,

I find that I saw 55 heads and 45 tails. Can I safely say that the coin is true?

Without some further guidelines, it would be very hard to say if this deviation from 50/50

really provides much evidence one way or the other. To proceed any further, we have to

have some notion of what we'd expect to see in the long run if the null hypothesis was true.

Then, we can come up with some rule to use in deciding if the coin is true or not, depending

on how willing we are to make a mistake. Sadly, we can't make statements with complete

certainty, because there's always a chance that, even if the coin was true, we'd happen to

see more heads than tails, or, conversely, if the coin was weighted, we might just happen

to see nearly equal numbers of heads and tails when we tossed the coin many times. The

way we come up with our rule is by stating some assumptions about what we'd expect to see

if the null hypothesis was true, and then making a decision rule based on those assumptions.

For tossing a fair coin (which is what the null hypothesis states), most statisticians

agree that the number of heads (or tails) that we would expect follows what is called a

binomial distribution. This distribution takes two parameters: the theoretical probability

of the event in question (let's say the event of getting a head when we toss the coin), and

the number of times we toss the coin. Under the null hypothesis, the probability is .5.

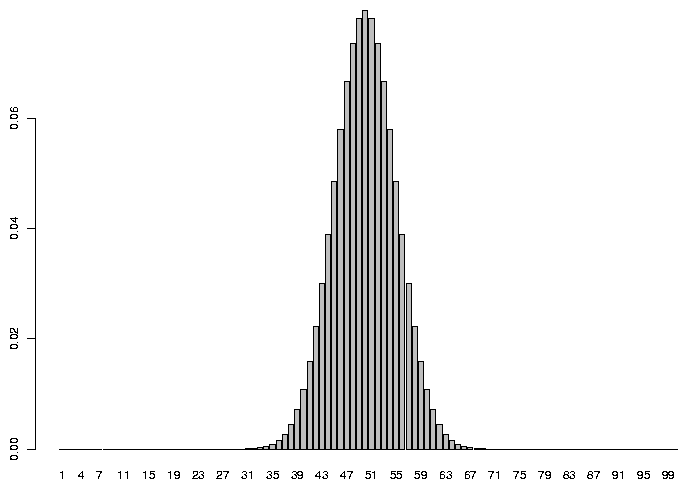

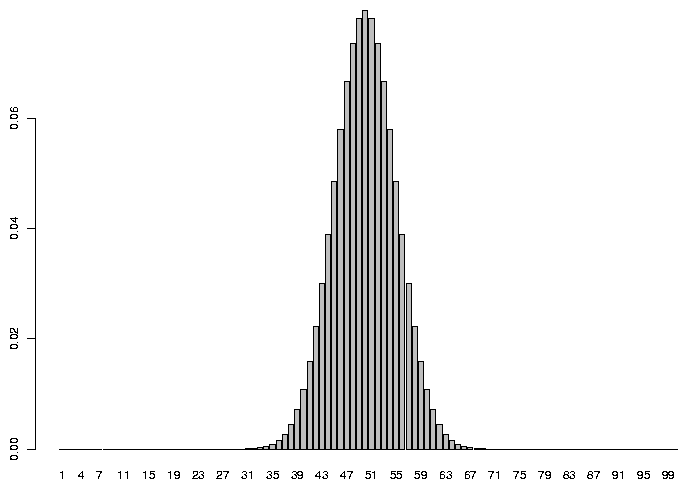

In R, we can see the expected probability of getting any particular number of heads if we

tossed a coin 100 times by plotting the

density function for the binomial distribution with parameters 100 and .5 .

> barplot(dbinom(1:100,100,.5),names.arg=1:100)

As you'd probably expect, the most common value we'd see would be 50, but there are

lots of reasonable values that aren't exactly 50 that would also occur pretty often.

So to make a decision rule for when we will reject the null hypothesis, we would

first decide how often we're willing to reject the null hypothesis when it was actually

true. For example, looking at the graph, there's a tiny probability that we'd see

as few as 30 heads or as many as 70 heads when we tossed a true coin 100 times.

But if we tossed a coin 100 times and only saw 30 heads, we'd be wise if we said the

coin wasn't true because 30 heads out of 100 is such a rare event. On the other hand,

we can see on the graph that the probability of getting, say 45 heads is still pretty

high, and we might very well want to accept the null hypothesis in this case.

Without any compelling reason to choose otherwise, people usually will accept an

error of 5% when performing a hypothesis test, although you might want to alter that

value in situations when making such an error is more or less of a problem than usual.

With a two-sided hypothesis like this one, to come up with a decision rule that would

be wrong only 5% of the time, we'd need to find the number of heads for which we'd

see fewer heads 2.5% of the time, and the number of heads for which we'd see more

heads 2.5% of the time. To find quantities like this for the binomial distribution,

we can use the qbinom function. The lower limit we want is the result of

calling qbinom with an argument of 0.025, and the upper limit is the result

of calling qbinom with an argument of 0.975:

As you'd probably expect, the most common value we'd see would be 50, but there are

lots of reasonable values that aren't exactly 50 that would also occur pretty often.

So to make a decision rule for when we will reject the null hypothesis, we would

first decide how often we're willing to reject the null hypothesis when it was actually

true. For example, looking at the graph, there's a tiny probability that we'd see

as few as 30 heads or as many as 70 heads when we tossed a true coin 100 times.

But if we tossed a coin 100 times and only saw 30 heads, we'd be wise if we said the

coin wasn't true because 30 heads out of 100 is such a rare event. On the other hand,

we can see on the graph that the probability of getting, say 45 heads is still pretty

high, and we might very well want to accept the null hypothesis in this case.

Without any compelling reason to choose otherwise, people usually will accept an

error of 5% when performing a hypothesis test, although you might want to alter that

value in situations when making such an error is more or less of a problem than usual.

With a two-sided hypothesis like this one, to come up with a decision rule that would

be wrong only 5% of the time, we'd need to find the number of heads for which we'd

see fewer heads 2.5% of the time, and the number of heads for which we'd see more

heads 2.5% of the time. To find quantities like this for the binomial distribution,

we can use the qbinom function. The lower limit we want is the result of

calling qbinom with an argument of 0.025, and the upper limit is the result

of calling qbinom with an argument of 0.975:

> qbinom(.025,100,.5)

[1] 40

> qbinom(.975,100,.5)

[1] 60

So in this experiment, if the number of heads we saw was between 40 and 60 (out of 100

tosses), we would tentatively accept the null hypothesis; formally we would say that there's

not enough evidence to reject the null hypothesis. If we saw fewer than 40 or more than

60 tosses, we would say we had enough evidence to reject the null hypothesis, knowing that

we would be wrong only 5% of the time. When we reject the null hypothesis when it was

actually true, it's said to be a Type I error. So in this example, we're setting the Type

I error rate to 5%.

To summarize, the steps for performing a hypothesis test are:

- Describe a null hypothesis and an alternative hypothesis.

-

Specify a significance or alpha level for the hypothesis test. This is the percent

of the time that you're willing to be wrong when you reject the null hypothesis.

-

Formulate some assumptions about the distribution of the statistic that's involved

in the hypothesis test. In this example we made the assumption that a fair coin follows

a binomial distribution with p=0.50.

-

Using the assumptions you made and the alpha level you decided on, construct the

rejection region for the test, that is, the values of the statistic for which you'll be

willing to reject the null hypothesis. In this example, the rejection region is broken

up into two sections: less than 40 heads and more than 60 heads.

Although it's not always presented in this way, one useful way to think about hypothesis

testing is that if you follow the above steps, and your test statistic is in the rejection

region then either the null hypothesis is not reasonable or your assumptions are wrong.

By following this framework, we can test a wide variety of hypotheses, and it's always

clear exactly what we're assuming about the data.

Most of hypothesis testing is focused on the null hypothesis, and on possibly rejecting it.

There are two main reasons for this. First, there is only one null hypothesis, so we don't

have to consider a variety of possibilities. Second, as we've seen, it's very easy to

control the error rate when rejecting the null hypothesis. The other side of the coin

has to do with the case where the null hypothesis is not true. This case is much more

complicated because there are many different ways to reject the null hypothesis, and it

would be naive to believe that they'd all behave the same way. When the null hypothesis

is not true, we might mistakenly accept the null hypothesis anyway. Just as we might see

an unusually high number of heads for a true coin, we might coincidentally see nearly equal

numbers of heads and tails with a coin that was actually weighted. The case where the

null hypothesis is not true, but we fail to reject it results in a Type II error.

Type II error is usually expressed as power, which is 1 minus the probability of making an

error. Power is the proportion of the time that you correctly reject the

null hypothesis when it isn't true. Notice that the power of a hypothesis test changes

depending on what the alternative is. In other words, it makes sense that if we had a

coin that was so heavily weighted that it usually had a probability of 0.8 of getting heads,

we'd have more power in detecting the discrepancy from the null distribution than if our

coin was only weighted to give a 0.55 probability of heads. Also, unlike Type I error, we

can't set a Type II error rate before we start the experiment - the only way to change

the Type II error rate is to get more samples or redesign the experiment.

File translated from

TEX

by

TTH,

version 3.67.

On 1 Apr 2009, 15:57.

As you'd probably expect, the most common value we'd see would be 50, but there are

lots of reasonable values that aren't exactly 50 that would also occur pretty often.

So to make a decision rule for when we will reject the null hypothesis, we would

first decide how often we're willing to reject the null hypothesis when it was actually

true. For example, looking at the graph, there's a tiny probability that we'd see

as few as 30 heads or as many as 70 heads when we tossed a true coin 100 times.

But if we tossed a coin 100 times and only saw 30 heads, we'd be wise if we said the

coin wasn't true because 30 heads out of 100 is such a rare event. On the other hand,

we can see on the graph that the probability of getting, say 45 heads is still pretty

high, and we might very well want to accept the null hypothesis in this case.

Without any compelling reason to choose otherwise, people usually will accept an

error of 5% when performing a hypothesis test, although you might want to alter that

value in situations when making such an error is more or less of a problem than usual.

With a two-sided hypothesis like this one, to come up with a decision rule that would

be wrong only 5% of the time, we'd need to find the number of heads for which we'd

see fewer heads 2.5% of the time, and the number of heads for which we'd see more

heads 2.5% of the time. To find quantities like this for the binomial distribution,

we can use the qbinom function. The lower limit we want is the result of

calling qbinom with an argument of 0.025, and the upper limit is the result

of calling qbinom with an argument of 0.975:

As you'd probably expect, the most common value we'd see would be 50, but there are

lots of reasonable values that aren't exactly 50 that would also occur pretty often.

So to make a decision rule for when we will reject the null hypothesis, we would

first decide how often we're willing to reject the null hypothesis when it was actually

true. For example, looking at the graph, there's a tiny probability that we'd see

as few as 30 heads or as many as 70 heads when we tossed a true coin 100 times.

But if we tossed a coin 100 times and only saw 30 heads, we'd be wise if we said the

coin wasn't true because 30 heads out of 100 is such a rare event. On the other hand,

we can see on the graph that the probability of getting, say 45 heads is still pretty

high, and we might very well want to accept the null hypothesis in this case.

Without any compelling reason to choose otherwise, people usually will accept an

error of 5% when performing a hypothesis test, although you might want to alter that

value in situations when making such an error is more or less of a problem than usual.

With a two-sided hypothesis like this one, to come up with a decision rule that would

be wrong only 5% of the time, we'd need to find the number of heads for which we'd

see fewer heads 2.5% of the time, and the number of heads for which we'd see more

heads 2.5% of the time. To find quantities like this for the binomial distribution,

we can use the qbinom function. The lower limit we want is the result of

calling qbinom with an argument of 0.025, and the upper limit is the result

of calling qbinom with an argument of 0.975: